Attacking Windows Platform with EternalBlue Exploit via Android Phones | MS17-010

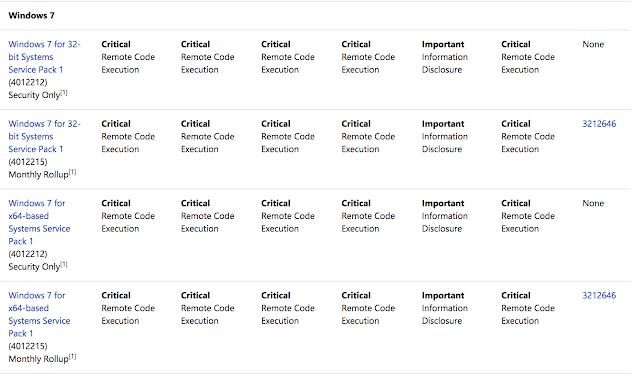

Introduction

On 14 April 2017, a hacker group known as the Shadow Brokers leaked an exploitation toolkit used by the National Security Agency (NSA). One of the leaked exploits, EternalBlue, was later used in the worldwide WannaCry ransomware attack. According to former NSA employees, EternalBlue was developed and used by the NSA.

Lab Environment

Target Machine: Windows 7 Ultimate x64
Attacker Machine: Android 5.1

What is EternalBlue

EternalBlue exploits a vulnerability in the Server Message Block (SMB) protocol on various Microsoft Windows platforms. The vulnerability is listed as CVE-2017-0144 in the CVE catalog. The most severe of the vulnerabilities covered by MS17-010 could allow remote code execution if an attacker sends specially crafted messages to a Microsoft Server Message Block 1.0 (SMBv1) server.

Windows 7 Releases Affected by EternalBlue

Installing Metasploit Framework on Android

Step 1: Download Termux from the Play Store....
